Explore our expert-made templates & start with the right one for you.

Using Primary Keys to Insert, Update and Delete Rows in Data Lake Tables

Primary Keys Help Make a Data Lake Act More Like a Database

Primary keys are a database concept. They’re used to uniquely identify records in a table. With Upsolver SQLake, you can define primary keys for tables in your data lake. This enables you to insert, update, and delete records in the data lake just as you would in a traditional database – something that up until now has been notoriously difficult.

When should you use a primary key?

If you want to have only one record for a given data attribute (or set of attributes) – that is, there should not be any duplicate records in the table for those attribute(s) – then define a PRIMARY KEY. SQLake uses the primary key to match records between your source and target tables and will UPSERT data in the target table for any matching records. For example, you would create the Orders table with a PRIMARY KEY if it’s required that you have only the latest order status, such as “in-progress,” “payment-processed,” and “purchase-complete.” As new order events arrive, SQLake matches the primary key and updates the existing record with the new value.

When should you not use a primary key?

If the table is APPEND only – that is, you want to retain every event/record that arrives because you want access to the entire event history – then you do not define a PRIMARY KEY when creating a table in SQLake. For example, you would not define a PRIMARY KEY if the Orders table is required to capture all the order status events that arrive. This is helpful if you need to replay the processing events to correct errors in the data or enrich the events.

Note: Only define PRIMARY KEY using the attributes already present in the data. Do not use any auto-generated attribute or sequence number.

SQLake Syntax to add PRIMARY KEY

There are two ways to create tables in SQLake:

- Create a table by including a list of columns and data types, schema, that SQLake will use to insert records into the table.

- Create a table without including a list of columns and types, leaving the column list empty. When the column list is empty, SQLake automatically detects the schema and updates the AWS Glue Data Catalog with column names, data types and partition information.

In both cases, if a primary key is required, you must define the key column name in the column list as shown below:

CREATE TABLE sales_catalog.salesdb.orders_info ( order_id string ) PRIMARY KEY order_id;

Similarly you can create a table with multiple columns as primary keys:

CREATE TABLE sales_catalog.salesdb.orders_info (

order_id string,

order_date string

)

PRIMARY KEY order_id, order_date;

Using SQLake with PRIMARY KEY

This involves 6 steps:

- Create a staging table

- Create an ingest job to copy raw events into the staging table

- Create a target table with PRIMARY KEY

- Create a transformation job to load the target table

- Query the staging table (has duplicate events per key)

- Query the target table (has only 1 event per key)

Let’s assume your Connection, Catalog, and Database names are defined as follows:

Connection Name for s3 bucket: upsolver_s3_samples

Catalog name: default_glue_catalog

Database name: database_0297c0

1. Create a staging table

CREATE TABLE default_glue_catalog.database_0297c0.staged_orders_info () PARTITIONED BY $event_date;

2. Create an ingest job to copy raw events into the staging table

CREATE SYNC JOB load_orders_raw_data_from_s3

CONTENT_TYPE = CSV

AS COPY FROM S3 upsolver_s3_samples

BUCKET = 'upsolver-samples'

PREFIX = 'orders_with_duplicates/'

INTO default_glue_catalog.database_0297c0.staged_orders_info;

This job load_orders_raw_data_from_s3 reads the source data and inserts it into the data lake table staged_orders_info unchanged. It’s a copy of the source data. This is an append-only table that retains all events.

3. Create a target table with PRIMARY KEY

CREATE TABLE default_glue_catalog.database_0297c0.orders_info_pk_id (

order_id string,

partition_date date

)

PRIMARY KEY order_id

PARTITIONED BY partition_date;

4. Create a transformation job to load the target table

CREATE SYNC JOB load_staged_orders_to_order_pk_id

START_FROM = BEGINNING

ADD_MISSING_COLUMNS = TRUE

RUN_INTERVAL = 1 MINUTE

AS INSERT INTO default_glue_catalog.database_0297c0.orders_info_pk_id MAP_COLUMNS_BY_NAME

SELECT

orderid AS order_id,

orderdate AS order_date,

nettotal AS total,

orderstatus AS order_status,

$commit_time AS partition_date

FROM default_glue_catalog.database_0297c0.staged_orders_info

WHERE $event_time BETWEEN run_start_time() AND run_end_time();

Note that no special syntax (such as MERGE WHEN) is required to Upsert rows based on a primary key. SQLake already knows a primary key is defined and automatically inserts new rows and updates existing rows based on this key column.

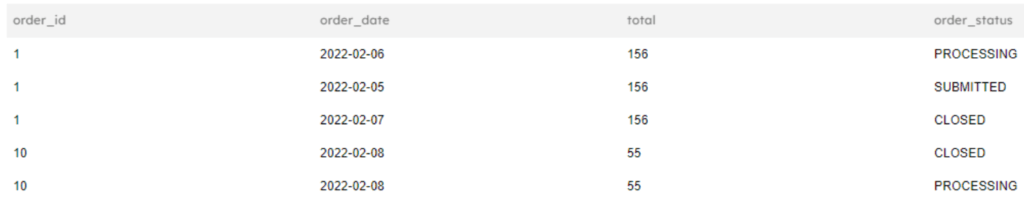

5. Query the staging table (with duplicates)

SELECT orderid AS order_id,

orderdate AS order_date,

nettotal AS total,

orderstatus AS order_status

FROM default_glue_catalog.database_0297c0.staged_orders_info

ORDER BY orderid

LIMIT 10;

When you query the raw table it’s easy to see duplicates. That’s okay – we want to keep all events generated in case we need to audit or replay the data.

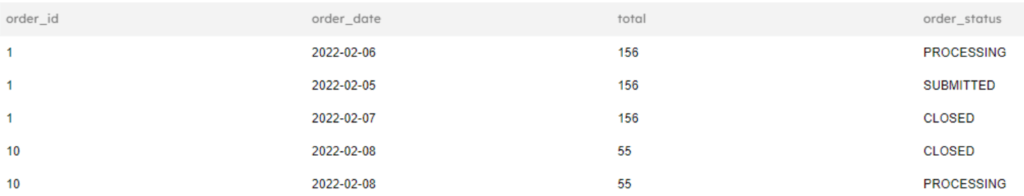

6. Query the target table (no duplicates)

SELECT order_id,

order_date,

total,

order_status

FROM default_glue_catalog.database_0297c0.orders_info_pk_id

ORDER BY orderid

LIMIT 10;

When you query the final target table, you will see it was updated with the latest event attributes and only one row per order_id is present. SQLake automatically updated the rows based on the primary key and there are no duplicates in the table.

Summary

Defining primary keys on your data lake tables enables SQLake to automatically insert, update, and delete records. You simply define a primary key on the target data lake table. When you create a job that writes into a data lake table with a primary key, SQLake knows how to insert and update rows (upsert) without requiring you to perform any additional configuration or maintenance.

Get started today for free with our sample data, or bring your own.

Discover how companies like yours use Upsolver

Data preparation for Amazon Athena.

Browsi replaced Spark, Lambda, and EMR with Upsolver’s self-service data integration.

Read case studyBuilding a multi-purpose data lake.

ironSource operationalizes petabyte-scale streaming data.

Read case studyDatabase replication and CDC.

Peer39 chose Upsolver over Databricks to migrate from Netezza to the Cloud.

Read case studyReal-time machine learning.

Bigabid chose Upsolver Lookup Tables over Redis and DynamoDB for low-latency data serving.

Read case study

Start for free - No credit card required

Batch and streaming pipelines.

Accelerate data lake queries

Real-time ETL for cloud data warehouse

Build real-time data products