Explore our expert-made templates & start with the right one for you.

An Introduction to Polaris Catalog for Apache Iceberg

Open-source file and table formats are of great interest to data teams due to their inherent interoperability and low total cost of ownership. Just last summer (June 2024), both Databricks and Snowflake rolled out enhancements to their engines to enable interoperability with Apache Iceberg.

There’s a good reason for this. Interoperability and data portability make it possible to create and access data wherever it is, cost effectively. Interoperability also minimizes key issues organizations face, like high costs and duplicated data, when delivering use cases that require accessing data using different tools.

Despite the widespread adoption of open table formats, however, limitations still exist when it comes to integrations between query engines and data catalogs. These limitations lead to vendor lock-in situations and detract from the goal of open standards. In particular, the catalog creates a critical decision point in the data creation and query paths that controls access to data.

To level the playing field and accelerate interoperability across engines, the Iceberg community developed and promoted an open standard based RESTful APIs for communicating changes to Iceberg tables. This opens the door for new data catalog implementations—and Apache (Incubating) Polaris Catalog is one of the first complete implementations to be released to open source.

What is the Polaris Catalog?

Apache (Incubating) Polaris Catalog, created and open-sourced by Snowflake is a technical catalog to manage Apache Iceberg tables. Based on the Iceberg REST API , Polaris Catalog makes it easy to create, update and manage Iceberg tables in cloud object stores, enabling centralized, secure access to Iceberg tables across compatible query engines such as Apache Spark, Presto and Trino.

Unlike existing catalog solutions that are tightly integrated with specific platforms—for example, Unity Catalog for Databricks—the Polaris Catalog enables data teams to interoperate many engines on a single copy of data. Data teams can use already familiar tools like Snowflake and Databricks to discover, secure, read from and write to Iceberg tables with Polaris Catalog.

Benefits of Polaris Catalog for data teams

Unified access across engines

As we’ve already mentioned, Polaris Catalog supports multi-engine interoperability, allowing data teams and users to access and manage Iceberg tables using any query engine that supports the Iceberg REST API.

With compatible engines, Iceberg read and write requests are routed through the Polaris Catalog. This allows teams with diverse requirements to independently query and update tables. Iceberg table format and Polaris Catalog provide ACID guarantees and ensures consistent results for all users.

Vendor neutrality

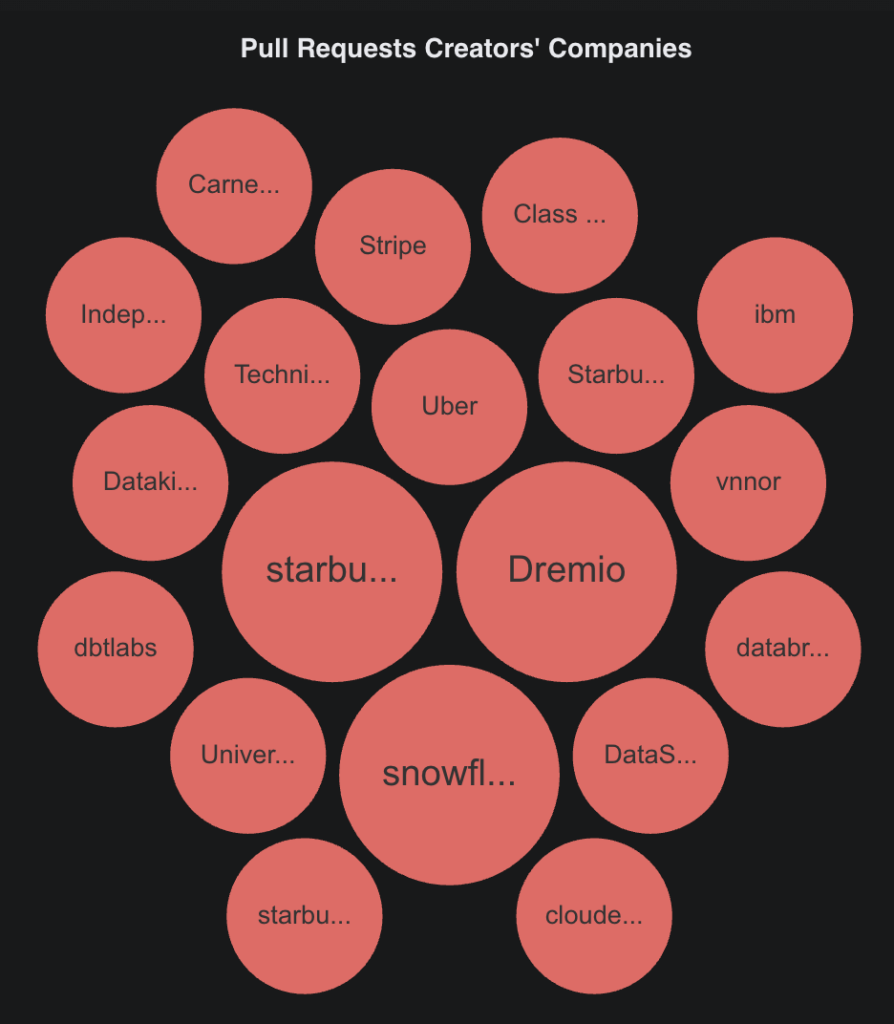

Although initially created and promoted by Snowflake, Polaris Catalog is developed and maintained by a growing number of contributors from diverse companies like Stripe, IBM, Uber, Starburst and others.

Pull Request creators for Apache/Polaris project by OSS Insights

Polaris has been purposely designed to be vendor-neutral and avoid lock-in by allowing data to be stored, secured and accessed regardless of the underlying catalog or table storage infrastructure or where it’s hosted. Data teams can run Polaris Catalog on their infrastructure of choice—either fully hosted on Snowflake’s AI Data Cloud infrastructure or by self-hosting in their own environment using Kubernetes or alternative compute option.

Built on Snowflake’s proven governance features

Polaris Catalog comes with built-in RBAC to manage and control access to tables. Following Snowflake’s design principles of simplicity, Polaris Catalog RBAC is simple and easy to manage, provides catalog, schema and table level granularity and works out of the box with several popular query engines.

Enabling RBAC with Polaris Catalog decouples policy management and storage from any individual vendor solution. This makes access policies portable across platforms and simpler to adopt new query tools without the need to recreate security policies directly inside each tool. Goodbye long, costly migrations.

Catalog federation

Polaris Catalog offers support for both internal and external catalogs. Internal catalogs are managed by Polaris, allowing for read and write operations, while external catalogs are managed by other providers (e.g., Snowflake, Dremio) and are read-only within Polaris Catalog. Supporting these modes enables a hybrid deployment and a gradual migration to a full lakehouse architecture. A common scenario starts with Iceberg tables being fully managed by Snowflake and over time, migrating them to externally managed tables – for example, ingesting and optimizing using Upsolver; query with Snowflake. The approach offers flexibility, cost saving and minimizes vendor lock-in at your own pace.

What does this mean for Snowflake users?

While Snowflake simplifies the processing, sharing, and analysis of large datasets for analytics and BI, teams with advanced data engineering requirements, streaming analytics and AI projects need to supplement this with various tools. Each tool requires its own copy of the data, separate access policies and enforcement and a non-uniform mechanism to discover datasets. This would normally mean copying data from Snowflake to an object store before loading it into a chosen tool, manually creating roles and policies and updating the tool’s own catalog to enable discovery and search. This is a time-consuming process that leads to increased storage costs, privacy risks, consistency challenges, and vendor lock-in across multiple vendors.

Upsolver’s integration with Polaris Catalog, Snowflake users can now store a single copy of their data in Amazon S3 using Iceberg table format. These tables are registered in the Polaris Catalog, enabling seamless querying from Snowflake and other engines like Apache Spark, Trino, Presto, Starburst, Amazon Athena, Amazon Redshift, Dremio and other Iceberg compatible engines.

Upsolver streamlines this process by allowing users to configure their data lake and manage ingestion tasks in one place. The platform continuously merges, optimizes, and maintains Iceberg tables automatically, ensuring consistent performance across engines your users depend on. Data is auditable, appropriate retention and cleanup are enforced and storage costs are continuously optimized.

Deploy and operationalize an Iceberg lakehouse in minutes, without data engineers.

Upsolver is the go-to vendor for data and analytics teams that want to implement Iceberg for managing their large-scale data lakes or lakehouses. As an official partner of Snowflake, Upsolver customers can continuously ingest files, streams, and CDC events at scale into Iceberg tables cataloged and secured by Polaris Catalog — all at a fraction of the cost of using Fivetran or similar ELT solution.

Together, Upsolver and Polaris Catalog allow companies to create a unified lakehouse that provides governed access to all of their data from popular analytics and AI engines enabling collaboration and accelerating innovation. All in all, Upsolver is by far the best way to use Iceberg with Snowflake.

Find out more about getting started with Upsolver and Polaris Catalog or get in touch to schedule a demo with one of our data engineers.